Accessibility Tools

PMG Digital Made for Humans

Exporting Google Analytics 4 Event Data to Google BigQuery

11 MINUTE READ | July 25, 2023

Exporting Google Analytics 4 Event Data to Google BigQuery

Neil Barnes

Neil Barnes has written this article. More details coming soon.

One of our favourite new features of Google Analytics 4 (GA4) is the export of raw GA4 event data into Google’s data warehousing product, Google BigQuery.

This feature is new for many of us as, in its previous life in Universal Analytics, it was restricted to customers on the 360 suite.

With this feature now available to all users of GA4, it opens up new possibilities for the use and analysis of your GA4 data, which we will explore in more detail in this guide.

There are four benefits of exporting GA4 data to Google BigQuery:

By setting up the GA4 data export to Google BigQuery, you are creating a back-up of your web analytics dataset that sits completely outside of the GA4 interface. In previous versions of Google Analytics, the closest you could get to a back-up would be to create an unfiltered view within the interface.

Whilst this served a purpose of providing a back-up within the interface itself, it was never a perfect solution. If the Google Analytics interface was ever offline or compromised, then there was no alternative way to view data. Furthermore, GA4 no longer has views, which adds a further complexity if you want to retain a back-up view of data within the interface.

With the export solution to Google BigQuery now available to all in GA4, it allows your data back-up to be stored completely outside of the GA4 interface.

The export from GA4 to Google BigQuery provides you a complete log of the events you are capturing.

The raw underlying event data is exported from the GA4 interface, and is stored in tables in Google BigQuery. Within Google BigQuery the raw data can be queried and analysed however you like, giving vast flexibility.

In previous versions of Google Analytics, access to raw data was either not available, or restricted to customers on the 360/premium version. By democratising the access of raw event data to all, it allows businesses big and small to deliver competitive advantage through connected measurement.

When you export the GA4 data to Google BigQuery, your business becomes the owner of the Google BigQuery dataset, which again is advantageous as it provides greater flexibility.

One common use case for exporting the raw GA4 event data to Google BigQuery is to join and integrate with other datasets to get a more holistic view of marketing performance.

For example, a lead generation business could export their raw GA4 event data to Google BigQuery and then integrate this dataset with their CRM (using a join key such as the client id) so performance can be measured and optimised to the final sale as opposed to the website lead.

eCommerce businesses could export their raw GA4 event data to Google BigQuery and then integrate this dataset with their CRM (using a join key such as the client id) to analyse the impact of marketing activity across the lifetime value of a customer, instead of a single purchase.

Yes, when data is collected into Google BigQuery, you will be charged based on both storage and processing of data (e.g. via Structured Query Language (SQL) querying).

These charges will differ based on location and region, and a handy resource for understanding these costs can be found here.

Storage costs can be broken down into two categories:

Active storage: This is for tables in Google BigQuery that have been modified in the last 90 days.

Long-term storage: This is for tables in Google BigQuery that have not been modified in the last 90 days.

At the time of writing, active storage costs for the UK is $0.023 (£0.018) per gigabyte per month. Long-term storage is $0.016 (£0.012) per gigabyte per month. All instances of Google BigQuery receive a free allowance of 10GB of storage per month.

Storage costs in Google BigQuery are relatively low, even if you have many terabytes of data.

The most common processing cost in Google BigQuery will be when you run SQL queries to extract data for further analysis.

All Google BigQuery instances have an allowance of 1 terabyte (1,024 GB) of processing per month. The cost beyond this free allowance is, for the UK, $6.25 (£4.84) per terabyte per month, at the time of writing.

Processing costs will tend to be higher relative to storage costs, particularly where raw data in Google BigQuery is regularly being queried for further analysis.

Whilst storage costs should be relatively easy to manage, processing costs in Google BigQuery can be difficult to manage effectively, particularly if multiple users are running queries regularly, or queries being run are inefficient leading to unnecessary costs.

Google BigQuery offers several safety measures to help control costs, which can be applied at the project or user level.

Costs can be managed by creating custom quotas that specify a limit on the amount of data that can be processed each day.

When the quota set has been exceeded, the query will return an error message confirming this and the query will need to be re-run the following day once the quota has been reset.

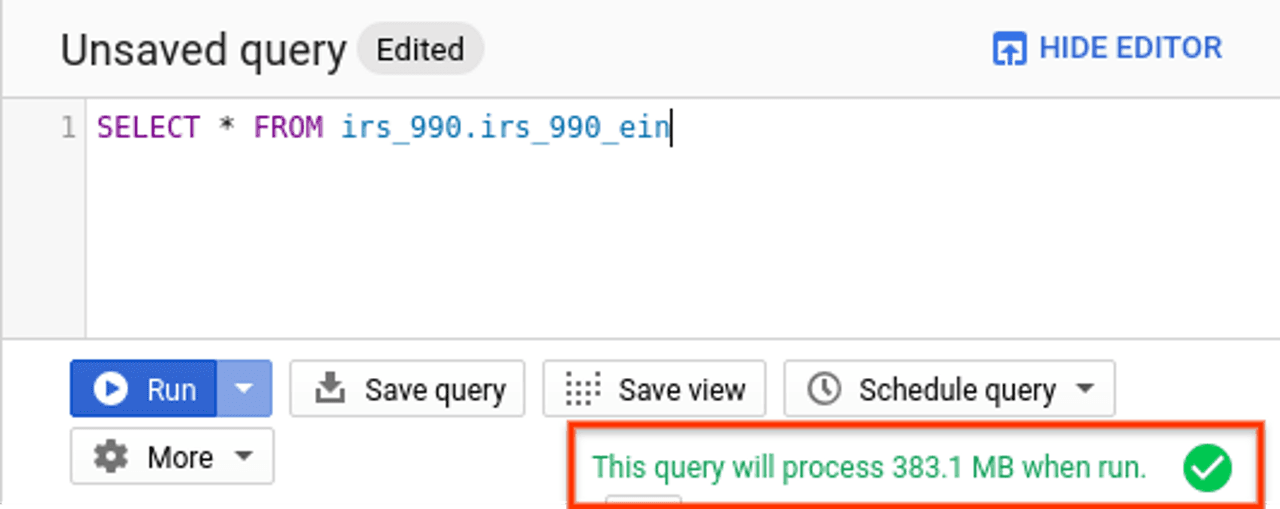

When creating a query in the Google Cloud console, the query validator verifies the query syntax and will provide an estimate of the size of the query being processed before the query is run/processed.

This is a very useful resource to understand the size of your query being run, and to test potential refinements to your SQL query to reduce size prior to running a query.

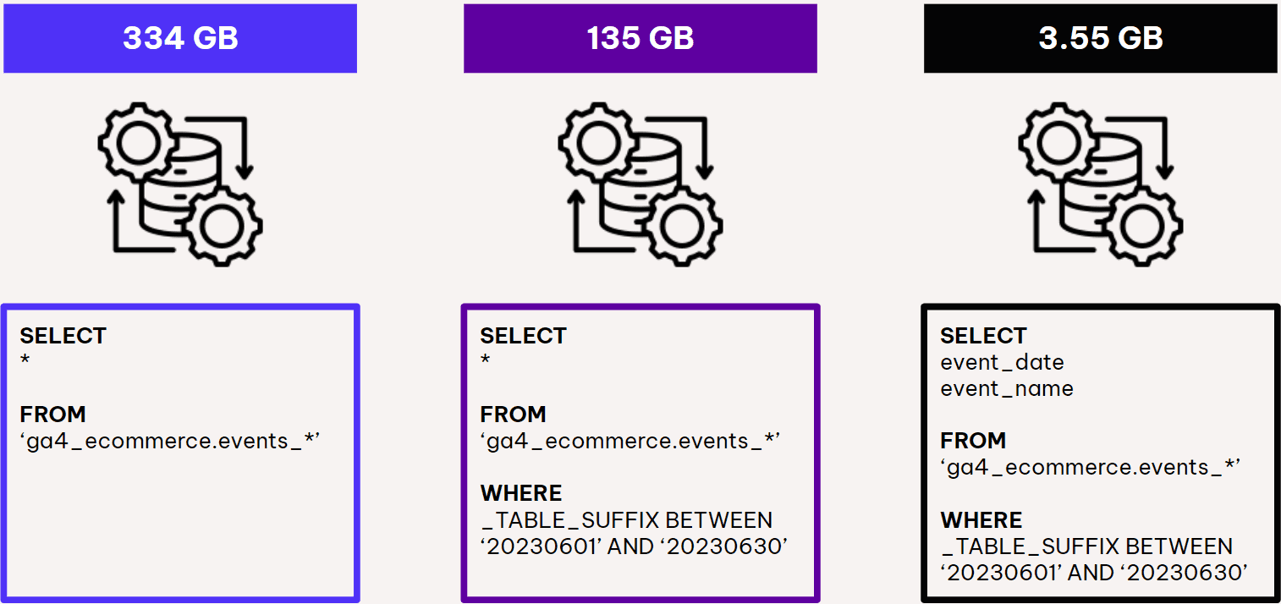

The configuration of your SQL query could have a significant impact on the amount of processing required.

Processing size is based on total data processed in the columns you have selected in your query. There are two common errors that we see which lead to inefficient queries

Including columns that are not needed for analysisFor example a query may use the SELECT * notation, which selects every column in the dataset, but the end analysis being completed only uses a subset of these columns. This leads to wasted processing and unnecessary costs. Making the query more efficient (by stating the required columns in the SELECT command) will significantly reduce processing volume, resulting in reduced costs.

Using functions such as LIMIT to try and save costsUsing functions such as LIMIT merely reduces the output of your query. Functions like LIMIT are not shortcuts to save costs on querying. The processing volume is exactly the same regardless of what you set LIMIT to.

For example a query with LIMIT 1 and LIMIT 10000000 would take the same amount of processing and would cost the same.

The below example demonstrates how a query can be optimised significantly by refining the SQL query.

At this point of reading our guide, you may be sold on the opportunities of warehousing your raw GA4 event data in Google BigQuery, but would like to ‘try before you buy’.

Google BigQuery offers a sandboxed environment, which can be linked to publicly available datasets, to allow you to become familiar with querying data in Google BigQuery using SQL.

The publicly available datasets include a GA4 dataset provided by Google. This is incredibly useful to learn and understand the use of raw GA4 data in Google BigQuery.

The sandboxed Google BigQuery instance can be created without adding a credit card or billing details. The sandboxed instance will permanently delete any tables you create after 60 days, but does have the same free usage allowance for storage (10GB per month) and processing (1TB per month) meaning ample opportunity to learn the capabilities of Google BigQuery in a safe environment.

Setting up the data export from GA4 to Google BigQuery requires two steps to be completed which are explained below.

Navigate to the Google API cloud console, enable the Google BigQuery API and review and agree to the terms of service.

Remember to provide billing details to upgrade from the sandboxed version.

Create a project ready to store your GA4 event data in.

Your permissions will need to be at the owner level to facilitate the data export.

You will need edit level permissions on the GA4 property to facilitate the data export.

Navigate to the admin panel and select ‘BigQuery Linking’.

Click ‘Link’ and choose the project you wish to link to.

It is important you set the data location right, as this isn’t easy to change once set-up. Choose the location you want to run queries from (e.g. London).

The daily frequency option is a full export of your GA4 data that happens once a day, once GA4 has processed all data for that day.

The streaming frequency creates an intraday table for the current day, meaning near real-time data is available for analysis. Note that streaming isn’t available in the sandboxed version of Google BigQuery and the streaming options incurs additional costs of $0.05 (£0.039) per gigabyte.

Review settings and click submit to create the link. It will take 24 hours for data to start appearing in your Google BigQuery instance.

The export schema is available here for reference.

The data that you see and analyse within the GA4 interface isn’t strictly the raw data. It is subject to various modelling and processing before it reaches the reports you view in the GA4 interface (for example, conversion modelling, inclusion of Google Signals and application of any property level data filters).

Google has produced a great article here which explains these concepts in more detail. The key points to be aware of have been summarised below:

The ‘users’ metric that you see in the pre-built reports in GA4 is not total users, but instead active users. An active user is defined as a user who has an engaged session.

When you run a user count in the Google BigQuery dataset, this is based on total users and will return a larger number as it will also include users who had a session that was defined as not engaged.

The metrics that you see and analyse in the GA4 interface are estimated using an algorithm called HyperLogLog++ to ensure a balance of speed of running reports vs accuracy of data.

When analysing the raw GA4 data in BigQuery, this will not be subject to the HyperLogLog++ algorithm and will return slightly different results.

High cardinality

Dimensions with high cardinality (i.e. a large number of unique values) can be grouped into a (other) row in the GA4 interface where a report exceeds the cardinality limit for the underlying table.

The raw GA4 data in Google BigQuery will not be impacted by cardinality, meaning potential differences in the output of data.

Google Signals

If you have activated Google Signals in your GA4 configuration, then this information will be available within the GA4 interface, but will not be available in the Google BigQuery data export.

Google Signals helps to consolidate user counts across different browser and device types for Google signed-in users, which will create differences in the data when compared to the Google BigQuery export.

When Google consent mode is activated, data in the GA4 interface is modelled for those who deny consent.

Whilst the cookieless pings are available in the raw Google BigQuery dataset, this dataset has no modelling applied to it, therefore there will be expected differences between the two datasets.

The raw data export of GA4 event data into Google BigQuery is one of our favourite features of GA4.

Implementing this feature will:

Provide a back-up of your GA4 dataset that sits outside of the GA4 interface.

Unlock access to the underlying raw event data, opening up new opportunities to deliver competitive advantage through advanced analysis.

Create a Google Analytics dataset that you have full ownership and control over.

Provide a solution to integrate GA4 event data with other sources (e.g. CRM), allowing a more holistic view of marketing performance beyond the initial conversion point.

Google BigQuery will incur costs in the form of storage and processing (i.e. running SQL queries).

Carefully consider how you run SQL queries in Google BigQuery, and put governance measures in place to keep a control on costs.

Use the sandboxed Google BigQuery instance to become familiar with the raw GA4 data structure, and to be comfortable in running SQL queries.

Sandboxed instances of Google BigQuery can be connected to publicly available datasets, with the same free processing limits (1TB per month) giving ample bandwidth to run SQL queries on GA4 data in a safe environment.

The data in the GA4 interface is subject to modelling and transformation through integrations such as Google Signals and Google Consent Mode.

The data collected in Google BigQuery is in its raw form and has no modelling applied to it. Therefore it is normal to expect small differences when comparing the output of the data in the GA4 interface vs the output from a SQL extract in Google BigQuery.

Stay in touch

Bringing news to you

Subscribe to our newsletter

By clicking and subscribing, you agree to our Terms of Service and Privacy Policy

A version of this article was originally published on RocketMill's website.